Posters from 1st BayLDS-Day

The first BayLDS-Day took place on february 10th 2023 in Thurnau. Attached you find information from the different groups and details of their research.

- A Secure Web Application for Evaluating the Safety and Effectiveness of the AI Clinician in Predicting Treatment Dosage for Septic PatientsHide

-

A Secure Web Application for Evaluating the Safety and Effectiveness of the AI Clinician in Predicting Treatment Dosage for Septic Patients

Qamar El Kotob, Matthieu Komorowski, Aldo Faisal

Sepsis is a life-threatening condition that can result from infection and is characterised by inflammation throughout the body. General guidelines are in place for sepsis management but finding optimal doses for individual patients remains a challenge. The use of AI-based decision support systems has the potential to improve patient outcomes and reduce the burden on healthcare providers. Our research group has developed the AI Clinician, an AI model that predicts personalised dosages of vasopressors and fluids for septic patients in ICU. This model, based on lab results, vital signs, and treatment history, has shown promising results in its ability to accurately forecast dosages. In order to further evaluate the performance of the AI Clinician and assess its accuracy, reliability, as well as its potential to improve patient outcomes, a secure web application was created. This user-friendly and intuitive application enables clinicians to access patient information and the AI’s recommendations. By using the web application, we aim to gain a better understanding of the strengths and limitations of the AI Clinician, as well as its potential to improve patient outcomes in real-world clinical settings. In addition to evaluating the AI Clinician, we aim for the application to serve as a tool to support clinicians and help them be more efficient in their decision making. Through this study, we provide valuable insights into the potential of AI to support clinical decision making in the management of sepsis, while refining a system that could serve as a model for similar projects in the future.

- AI in higher education: DABALUGA - Data-based Learning Support Assistant to support the individual study processHide

-

AI in higher education: DABALUGA - Data-based Learning Support Assistant to support the individual study process

Prof. Dr. Torsten Eymann, Christoph Koch, Prof. Dr. Sebastian Schanz, PD Dr. Frank Meyer, Max Mayer M.Sc., Robin Weidlich, Dr. Matthias Jahn

The DABALUGA project aims to support the individual study process through a "digital mentor". Through small-step data collection and analysis during the learning process, difficulties and obstacles are to be identified at an early stage. The data-bases learning support assistant DABALUGA shall give hints to students and thereby reducing the students drop-out rate.

Since 2014, the University of Bayreuth has been organizing the Student Life Cycle with an innovative, data-based Campus Management System (CMS) with various implemented interfaces. The study-related data collected in the CMS and learning management system (LMS) during studies can provide better insights into learning processes. Tasks in the LMS (MOODLE/elearning) must be didactically planned and structured in such a way that the learning process can be captured using AI. Students are contacted and informed directly (with SCRIT). Additional data collection (e.g. about learning type and learning motivation) and evaluation in accordance with data protection regulations is necessary, even if all supervision is voluntary.

AI and historical data analysis (data from previous terms) make it possible to divide large student groups into clusters and to give specific hints. Teaching should respond to students' difficulties and needs at short notice. Thus, the data-based learning support assistant DABALUGA contributes to the improvement of the quality in higher education. - AI in Higher Education: Developing an AI Curriculum for Business & Economics Students Hide

-

AI in Higher Education: Developing an AI Curriculum for Business & Economics Students

Torsten Eymann, Peter Hofmann, Agnes Koschmider, Luis Lämmermann, Fabian Richter, Yorck Zisgen

The deployment of AI in business requires specific competencies. In addition to technical expertise, the business sphere requires specific knowledge of how to evaluate technical systems, embed them in processes, working environments, products and services, and to manage them continuously. This bridge-building role falls primarily to economists as key decision-makers. The target group of the joint project, therefore, encompasses business administration and related (business informatics, buisiness engineering, etc.), which make up a total of approx. 22 % of students. The goal of the joint project is the development and provision of a teaching module kit for AI, that which conveys interdisciplinary AI skills to business students in a scientifically well-founded and practical manner. The modular teaching kit supports teaching for Bachelor's, Master's, Executive Master's students, and doctoral students at universities and universities of applied sciences (UAS). It comprises three elements: (1) AI-related teaching content, which conforms to the background, skills, and interests of the students as well as career-relevant requirements. Therefore, high-quality teaching content is being created and established as Open Education Resources (OER). (2) In the AI training factory, AI content will be developed in collaboration with students in a hands-on manner. (3) An organizational and technical networking platform is being created on which universities, industrial partners, and students can network. The joint project brings together eleven professorships from three universities and one UAS from three German states, who are united in promoting AI competencies among students of economics and business administration. Content and teaching modules are jointly developed, mutually used, and made available to the public. Compared to individual production, this strengthens the range and depth of the offer as well as the efficiency and quality of teaching.

- AI in Higher Education: Smart Sustainability Simulation GameHide

-

AI in Higher Education: Smart Sustainability Simulation Game

Torsten Eymann, Katharina Breiter

Smart Sustainability Simulation Game (S3G) is a student-centered, interactive simulation game with teamwork, gamification, and competition elements. The goal is to create a student-centered and didactically high-quality teaching offer that conveys techno-economic competencies in the context of digitalization and transformation of industrial value chains towards sustainability. The course will be offered in business administration and computer science programs at the participating universities, continued after the end of the project, and made available to other users (OER). Accordingly, the target group consists of master's students of business administration, computer science and courses at the interface (e.g., business and information systems engineering, industrial engineering, digitalization & entrepreneurship). Students learn in interdisciplinary, cross-university groups and find themselves in simulated practical situations in which they contribute and expand their competencies. Particular attention is paid to creating authentic challenge situations in a communication-oriented environment, teamwork, continuous feedback, and the opportunity to experiment. Based on four cases of an industrial value chain in the context of e-mobility, students work through various techno-economic questions relating to data management, data analytics, machine learning, value-oriented corporate decisions, sustainability decisions, strategic management, and data-based business models as realistically and hands-on as possible. For each case, the technical feasibility, economic sense, and sustainability impacts (ecological, social, and economic) are considered. In the sense of problem-based learning, students find solutions to the given problem largely on their own. Teachers merely act in a supporting role.

The project is funded by the "Stiftung Innovation in der Hochschullehre" and is being worked on and carried out jointly by the University of Bayreuth (Prof. Dr. Torsten Eymann), the University of Hohenheim (Prof. Dr. Henner Gimpel) and Augsburg University of Applied Sciences (Prof. Dr. Björn Häckel). The course offering will be carried out for the first time in the summer term 2023.

- Analysing Electrical Fingerprints for Process OptimizationHide

-

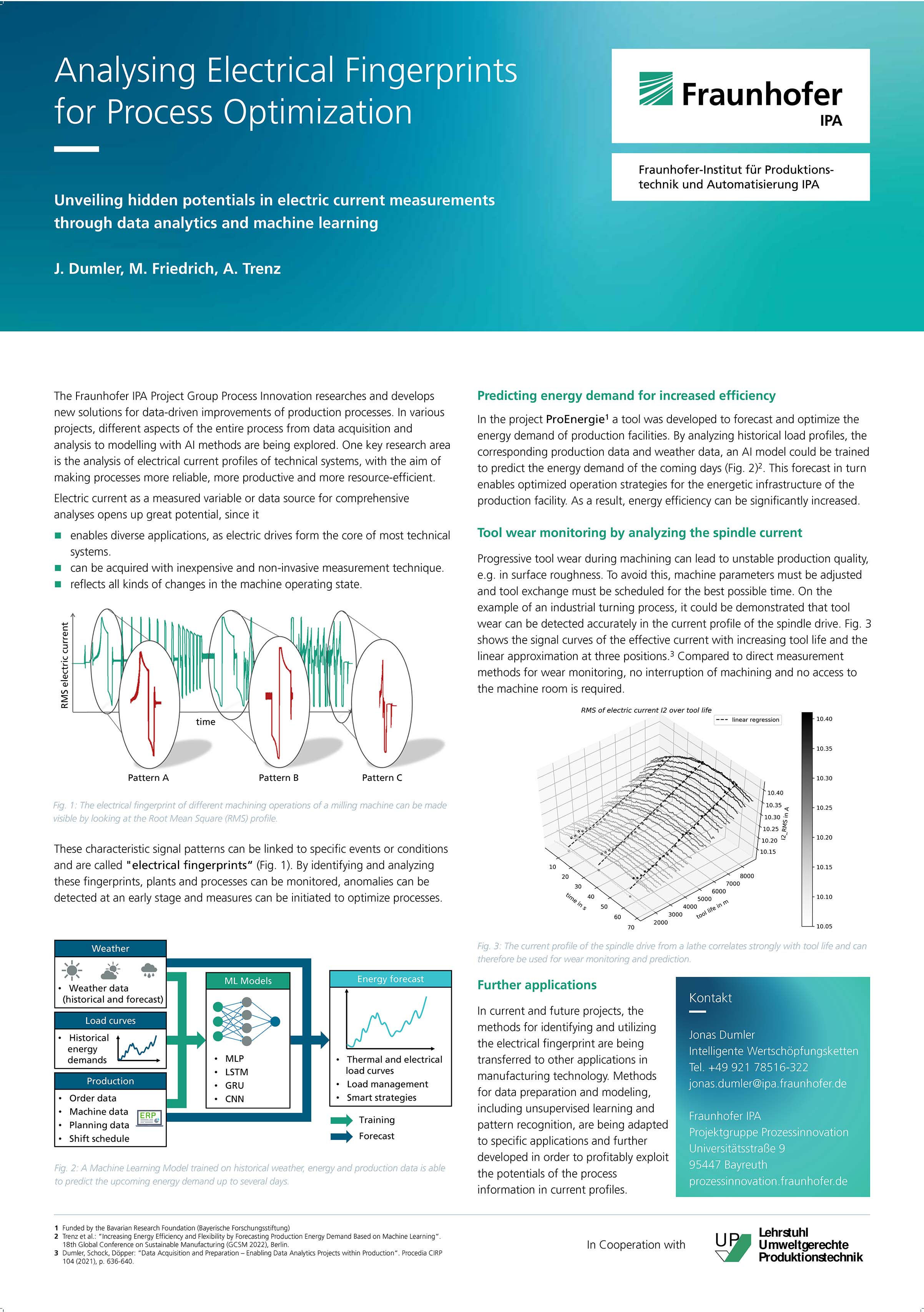

Analysing Electrical Fingerprints for Process Optimization

Jonas Dumler, Markus Friedrich, André Trenz

The Fraunhofer IPA Project Group Process Innovation researches and develops new solutions for the data-driven improvement of production processes. In the research, we consider the entire process from data acquisition and analysis to modelling with machine learning methods. One focus is the analysis of electrical current profiles of technical systems, with the aim of making processes more reliable, more productive and more resource-efficient.

Electric current as a measured variable or data source for comprehensive analyses opens up great potential, as electric drives are at the heart of many technical systems. Moreover, electrical power consumption can be measured using comparatively inexpensive measurement technology and with little installation effort. Different operating states of a machine or a drive are reflected in characteristic signal features, the "electrical fingerprint". By identifying and analysing these characteristics, plants and processes can be monitored, anomalies can be detected at an early stage and suitable measures can be initiated to optimise processes.

Two recent success stories illustrate the possibilities:

1) By analysing historical consumption data of production lines and the associated order data as well as information about the respective weather at the time, it was possible to develop a machine learning model to forecast the energy consumption of the upcoming days. This forecast in turn enables optimised planning of the energy use for the entire building infrastructure of the production facility. As a result, energy efficiency can be significantly increased.

2) In measured current profile of the spindle motor of a CNC milling machine, tool wear can be detected at an early stage so that the optimal timing for a tool change can be predicted. The data acquisition can be done without intervening in the machine room and without interrupting the machining process. - Anchoring as a Structural Bias of Group DeliberationHide

-

Anchoring as a Structural Bias of Group Deliberation

Sebastian Braun, Soroush Rafiee Rad, Olivier Roy

We study the anchoring effect in a computational model of group deliberation on preference rankings. Anchoring is a form of path-dependence through which the opinions of those who speak early have a stronger influence on the outcome of deliberation than the opinions of those who speak later. We show that anchoring can occur even among fully rational agents. We then compare the respective effects of anchoring and three other determinants of the deliberative outcome: the relative weight or social influence of the speakers, the popularity of a given speaker's opinion, and the homogeneity of the group. We find that, on average, anchoring has the strongest effect among these. We finally show that anchoring is often correlated with increases in proximity to single-plateauedness. We conclude that anchoring can be seen as a structural bias that might hinder some of the otherwise positive effects of group deliberation.

- An explainable machine learning model for predicting the risk of hypoxic-ischemic encephalopathy in new-bornsHide

-

An explainable machine learning model for predicting the risk of hypoxic-ischemic encephalopathy in new-borns

Balasundaram Kadirvelu, Vaisakh Krishnan Pulappatta Azhakapath,Pallavi Muraleedharan, Sudhin Thayyil, Aldo Faisal

Background

Around three million babies (10 to 20 per 1000 live births) sustain birth-related brain injury (hypoxic-ischemic encephalopathy or HIE) in low and middle-income countries, particularly in Africa and South Asia every year. More than half of the survivors develop cerebral palsy and epilepsy contributing substantially to the burden of preventable early childhood neuro-disability. The risk of HIE could be substantially reduced by identifying the babies at risk based on the antenatal medical history of the mother and providing better care to the mother during delivery.Methods

As part of the world’s most extensive study on babies with brain injuries (Prevention of Epilepsy by reducing Neonatal Encephalopathy study), we collected data from 38,994 deliveries (750 being HIE) from 3 public maternity hospitals in India. We have developed an explainable machine-learning model (using a gradient-boosted ensemble approach) that can predict the risk of HIE from ante-natal data available at the time of labour room admission. The model is trained on a dataset of 205 antenatal variables (113 being categorical variables). We used a cost-sensitive learning technique and a balanced ensemble approach to handling the strong class imbalance (1:52).Results

The ensemble model achieved an AUC score of 0.7 and a balanced accuracy of 70% on the test data and fares far better than clinicians in making an HIE prediction. A SHAP framework was used to interpret the trained model by focusing on how salient factors affect the risk of HIE. A model trained on the 20 most important features is available as a web app at brainsaver.org.Conclusion

Our model relies on clinical predictors that may be determined before birth, and pregnant women at risk of HIE might be recognised earlier in childbirth using this approach. - An Optimal Control Approach to Human-Computer InteractionHide

-

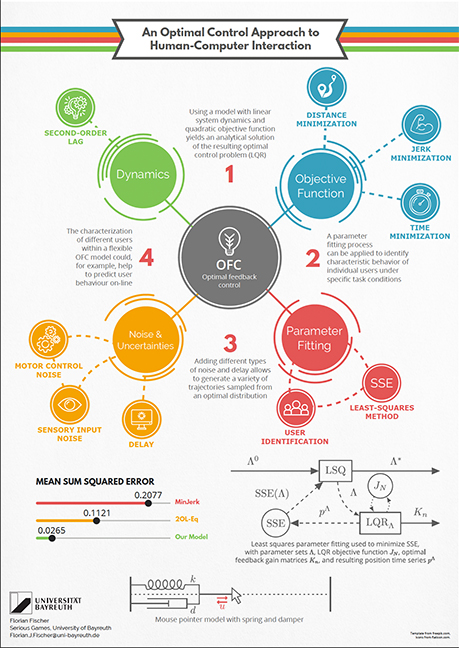

An Optimal Control Approach to Human-Computer Interaction

Florian Fischer, Markus Klar, Arthur Fleig, Jörg Müller

We explain how optimal feedback control (OFC), a theory used in the field of human motor control, can be applied to understanding Human-Computer Interaction.

We propose that the human body and computer dynamics can be interpreted as a single dynamical system. The system state is controlled by the user via muscle control signals, and estimated from sensory observations. We assume that humans aim at controlling their body optimally with respect to a task-dependent cost function, within the constraints imposed by body, environment, and task. Between-trial variability arises from signal-dependent control noise and observation noise.

Using the task of mouse pointing, we compare different models from optimal control theory and evaluate to what degree these models can replicate movements. For more complex tasks such as mid-air pointing in Virtual Reality (VR), we show how Deep Reinforcement Learning (DRL) methods can be leveraged to predict human movements in a full skeletal model of the upper extremity. Our proposed "User-in-the-Box" approach allows to learn muscle-actuated control policies for different movement-based tasks (e.g., target tracking or choice reaction), using customizable models of the user's biomechanics and perception.We conclude that combining OFC/DRL methods with a moment-by-moment simulation of the dynamics of the human body, physical input devices, and virtual objects, constitutes a powerful framework for evaluation, design, and engineering of future user interfaces.

- Application of ML models to enhance the sustainability of polymeric materials and processesHide

-

Application of ML models to enhance the sustainability of polymeric materials and processes

Rodrigo Q. Albuquerque, Tobias Standau, Christian Bruettting, Holger Ruckdaeschel

This work highlights some digitization activities in the Polymer Engineering Department related to the application of machine learning methods (ML) to predict properties of polymer materials and related processing parameters in their production. Training of supervised ML models (e.g., Gaussian processes, support vector machine, random forests) has been used to understand the relationships between general variables and various target properties of the systems under study. Understanding these relationships also aids in the intelligent development of novel materials and efficient processes, ultimately leading to improvements in the sustainability of the materials produced.

- ArchivalGossip.com. Digital Recovery of 19th-Century Tattle TalesHide

-

ArchivalGossip.com. Digital Recovery of 19th-Century Tattle Tales

Katrin Horn, Selina Foltinek

ArchivalGossip.com is the digital outlet of the American Studies research project “Economy and Epistemology of Gossip in Nineteenth-Century US American Culture” (2019-2022). Examining realist fiction, life writing, newspaper articles, and magazines, the project seeks to answer the questions of what and how gossip knows, and what this knowledge is worth. The digital part of the project collects letters, diaries, photographs and paintings, auto/biographies, and newspaper articles as well as information on people and events. Since gossip relies on networks, the project needs to store, sort, annotate, and visualize multiple documents, as well as illustrate their relation to each other. To this end, the team set up an Omeka database (ArchivalGossip.com/collection) that is freely available to the public and based on transcriptions and annotations of hundreds of primary sources from the nineteenth century. Additionally, we have built a WordPress website (ArchivalGossip.com) to add information on sources and archives to help contextualize our own research (bibliographies, annotation guidelines), and created blog posts to accompany the digital collections.

The database enables users to trace relations between people (e. g. “married to,” “friends with”) and references (e. g. “mentions,” “critical of,” “implies personal knowledge of”) in intuitive relationship-visualizations. The database and its diverse plugins furthermore facilitate work with different media formats and tracing relational agency of female actors in diverse ways: chronologically (timelines: Neatline Time), geospatially (Geolocation mapping tool), and according to research questions (exhibits, tags). The project furthermore uses DH tools such as Palladio to visualize historical data, for example, by providing graphs with different node sizes that display historical agents who participate in the exchange of correspondence. Metadata is collected according to Dublin Core Standards and additional, customized fields of item-type-specific information. Transcription of manuscript material is aided via the AI-supported tool Transkribus.

For more information, see: https://handbook.pubpub.org/pub/case-archival-gossip - Artificial intelligence as an aspect of computer science teaching in schoolsHide

-

Artificial intelligence as an aspect of computer science teaching in schools

Matthias Ehmann

We deal with artificial intelligence as part of computer science classes for schools. We develop teaching and learning scenarios for school students and teachers to impart artificial intelligence skills.

Artificial intelligence becomes a compulsory part of computer science classes for all students at Bavarian high schools this year. From March 2023, together with the State Ministry for Education and Culture, we will be running a three-year in-service training program for teachers at Bavarian high schools in order to qualify them professionally and didactically. As part of the training, we record and evaluate changes in teachers’ attitudes towards artificial intelligence.Since 2018 we have been conducting workshops for school students about artificial intelligence and autonomous driving at the TAO student research center. The students develop a basic understanding of artificial intelligence issues. They conduct their own experiments on decision-making with the k-nearest-neighbor method, artificial neurons and neural networks. They recognize the importance of data, data pre-processing and hyperparameters for the quality of artificial intelligence systems. They discuss opportunities, limits and risks of artificial intelligence.

We record and evaluate changes in attitudes of the participants towards artificial intelligence. - Bi-objective optimization to mitigate food quality losses in last-mile distributionHide

-

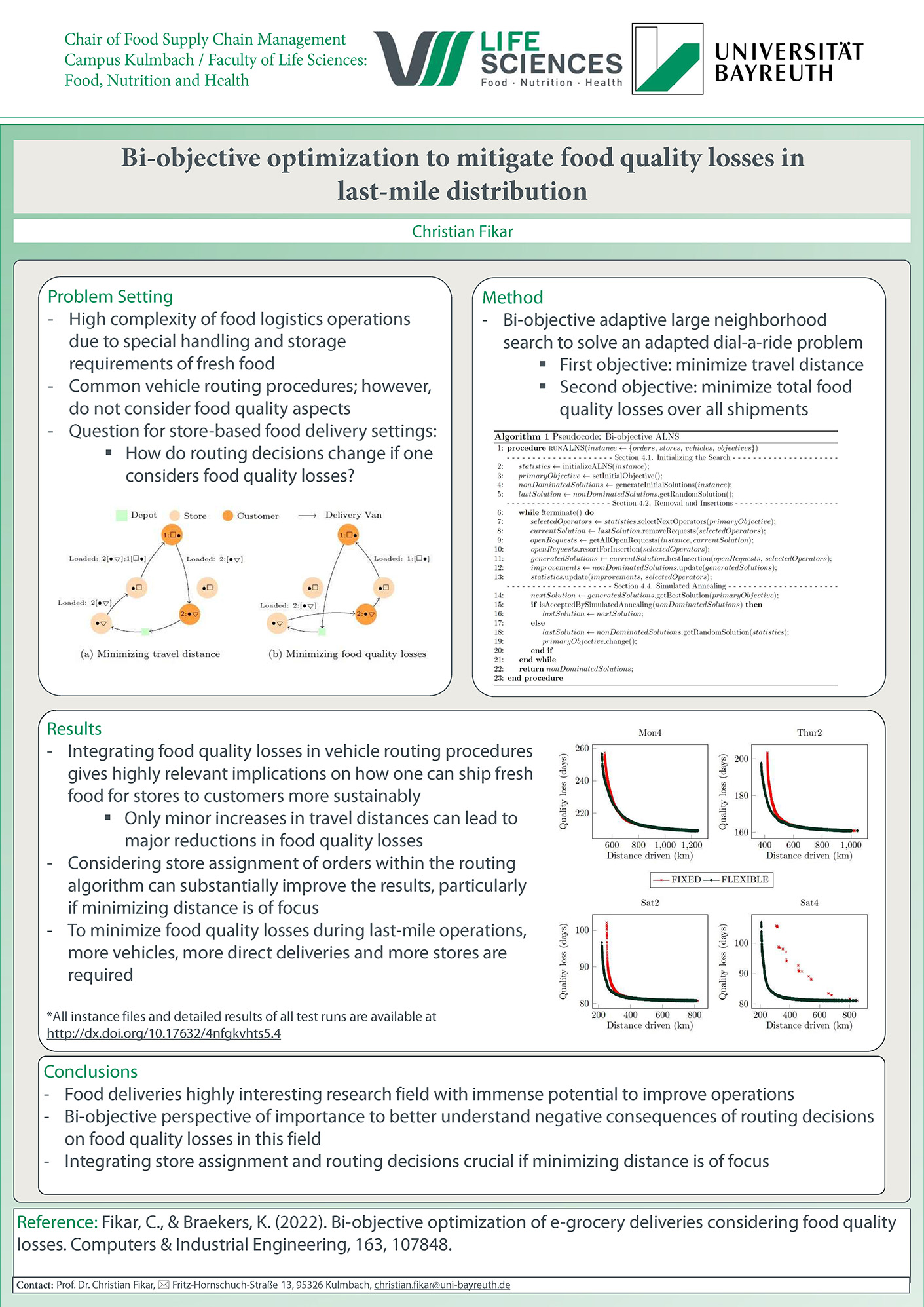

Bi-objective optimization to mitigate food quality losses in last-mile distribution

Christian Fikar

Food supply chain management is challenged by various uncertainties present in daily operations such as fluctuation in supply and demand as well as varying product qualities along production and logistics. Related processes are highly complex as various product characteristics need to be considered simultaneously to ship products from farm to fork on time and in the right quality. To contribute to a reduction in food waste and losses, this poster provides an overview of recent operations research activities of the Chair of Food Supply Chain Management in the context of last-mile deliveries of perishable food items. It introduces a bi-objective metaheuristic solution procedure to optimize home delivery of perishable food from stores to customers’ premises. A special focus is set on the consideration of quality aspects as well as on deriving strategies and implications to facilitate more sustainable operations in the future.

- Bioinformatics in plant defence researchHide

-

Bioinformatics in plant defence research

Anna Sommer1, Miriam Lenk2, Daniel Lang3, Claudia Knappe2, Marion Wenig2, Klaus F. X. Mayer3, A. Corina Vlot1

1University of Bayreuth, Faculty of Life Sciences: Food, Nutrition and Health, Chair of Crop Plant Genetics, Fritz-Hornschuch-Str. 13, 95326 Kulmbach, Germany.

2Helmholtz Zentrum München, Institute of Biochemical Plant Pathology, Neuherberg, Germany

3Helmholtz Zentrum München, Institute of Plant Genome and Systems Biology, Neuherberg, GermanyIn our group, we work on the genetic basis of systemic resistance in crop plants including wheat, barley, and tomato. To this end, we facilitate different computational analysis methods to evaluate changes, for example in gene expression patterns or microbiome composition. We work mainly on quantification and differential gene expression analysis of RNAseq data, working for example with DESeq2. While we have so far used commercial services, we aim to integrate quality control, pre-processing, and alignment of genes by utilizing FastQC, Bowtie2 or HISAT2 in our own data analysis pipeline. Regarding the microbiome of plants, we analyse 16S amplicon data utilizing Phyloseq as well as DESeq2.

In search of new plant immune regulators, we actively create plant mutants which are deficient in certain defence-associated genes via CRISPR/Cas9 genome editing. To improve this method, we evaluate mutation efficiency of CRISPR/Cas elements, in particular the guideRNA. To this end, we simulate secondary structures of guideRNA sequences and evaluate the complementarity between guideRNA and target sequences.

Monocotyledonous plants, including the staple crop wheat, present a specific challenge, since they have an especially large genome with over 17 Gb (five times the size of a human genome) and highly repetitive regions. In addition, the hexaploid chromosome set of wheat includes three subgenomes (each diploid) with more than 97% homology between the subgenomes, which adds its own particular challenges to working with these genomes.

Our overarching aim is to reduce the use of pesticides by strengthening the plans own defence mechanisms. To this end we aim to deepen our knowledge of plant signalling upon interaction with microbes, be it pathogenic or beneficial. - Biomechanical Simulations for Sport ProductsHide

-

Biomechanical Simulations for Sport Products

Michael Frisch, Tizian Scharl, Niko Nagengast

In the field of biomechanics and sports technology, simulation is a proven means of making predictions in the movement process or in product development.

On the one hand, software for modelling, simulating, controlling, and analysing the neuromusculoskeletal system is used at the Chair of Biomechanics to investigate possible muscular differences in different sports shoes. On the other hand, the process chain of virtual product development, consisting of computer-aided engineering (CAD), finite element analysis (FEA), structural optimisation and fatigue simulation, is used to find innovative solutions for sports products.For both digital methods, however, it is essential to carry out biomechanical analyses in advance using motion capturing, inertial measurement sensors and/or force measurement units, among other things, in order to record real boundary conditions and better understand motion sequences.

- Black holes, pulsations, and damping: a numerical analysis of relativistic galaxy dynamicsHide

-

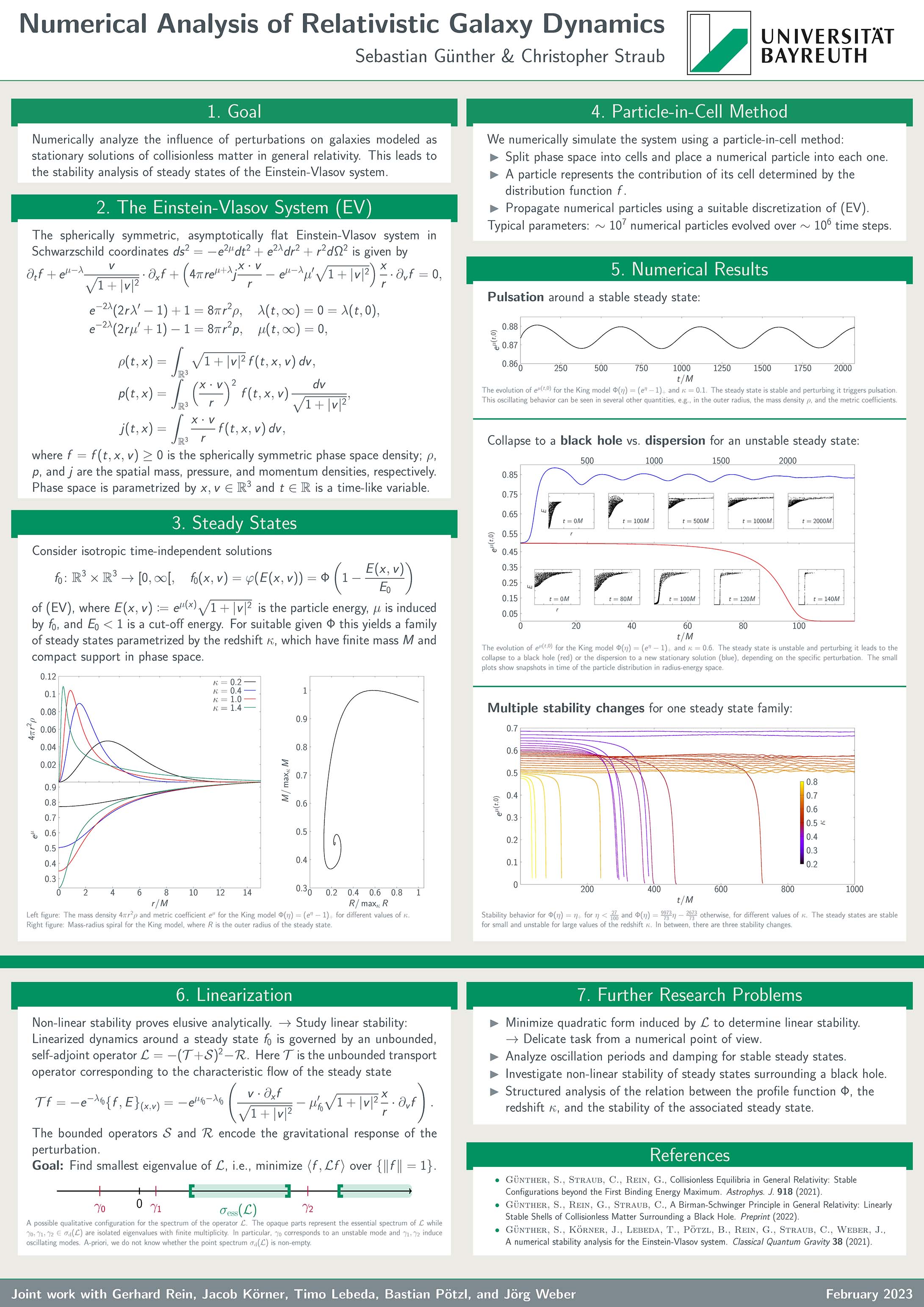

Black holes, pulsations, and damping: a numerical analysis of relativistic galaxy dynamics

Sebastian Günther, Christopher Straub

We analyse the dynamics of star clusters or galaxies modeled by the Einstein-Vlasov system, which describes the evolution of the phase space density function of the configuration.

Of particular interest is the stability behaviour of equilibria: We find that perturbing a steady state can lead to pulsations, damping, collapse to a black hole, or dispersion.

We numerically simulate various aspects of this system in order to verify the validity of mathematical hypotheses and to develop new ones.

Firstly, we use a particle-in-cell method to determine the non-linear stability of equilibria numerically and find that it behaves differently then previously thought in the literature.

Secondly, the spectral properties of the steady states are of interest in order to find oscillating and exponentially growing modes. This leads to a difficult optimization problem of an unbounded operator.

In both of these numerical issues, we mainly rely on "brute-force" algorithms which probably allow for a lot of improvement. - Blaming the Butler? - Consumer Responses to Service Failures of Smart Voice-Interaction TechnologiesHide

-

Blaming the Butler? - Consumer Responses to Service Failures of Smart Voice-Interaction Technologies

Timo Koch, Dr. Jonas Föhr, Prof. Dr. Claas Christian Germelmann

Marketing research has consistently demonstrated consumers’ tendency to attribute human-like roles to smart voice-interaction technologies (SVIT) (Novak and Hoffman 2019; Schweitzer 2019; Foehr and Germelmann 2020). However, smart service research has largely ignored the effect of such role attributions on service evaluations (Choi et al. 2021). Hence, we explore, how (failed) service encounters with SVIT affect consumers’ service satisfaction and usage intentions when role attributions are considered. Understanding such effects can help marketers to design more purposeful smart services.

We conducted a series of two scenario-based online experiments employing a Wizard of Oz approach. Thus, we manipulated role attribution by semantic priming in the first and by verbal priming in the second study and differentiated between a successful service, a mild, and a strong service failure. In the scenarios, consumers engaged in fictitious service encounters with SVIT, in which they vocally commanded the SVIT what to do whereas the SVIT answered verbally. A pretest in each study confirmed the success of our manipulations so that the tested scenarios could be employed in our main studies.

The results show that service failures significantly affected both consumers’ service satisfaction and usage intention. Nevertheless, we found contrary results to previous studies regarding the moderating influence of role attributions. Thus, we found preliminary evidence that service failures by SVITs are more likely to be excused if SVITs are construed as masters, and not as servants. However, strong manipulation was needed to let the consumers perceive the SVIT as a master or as a servant. Hence, future research should examine under which conditions consumers are affected by different role attributions to SVIT.

- Collective variables in complex systems: from molecular dynamics to agent-based models and fluid dynamicsHide

-

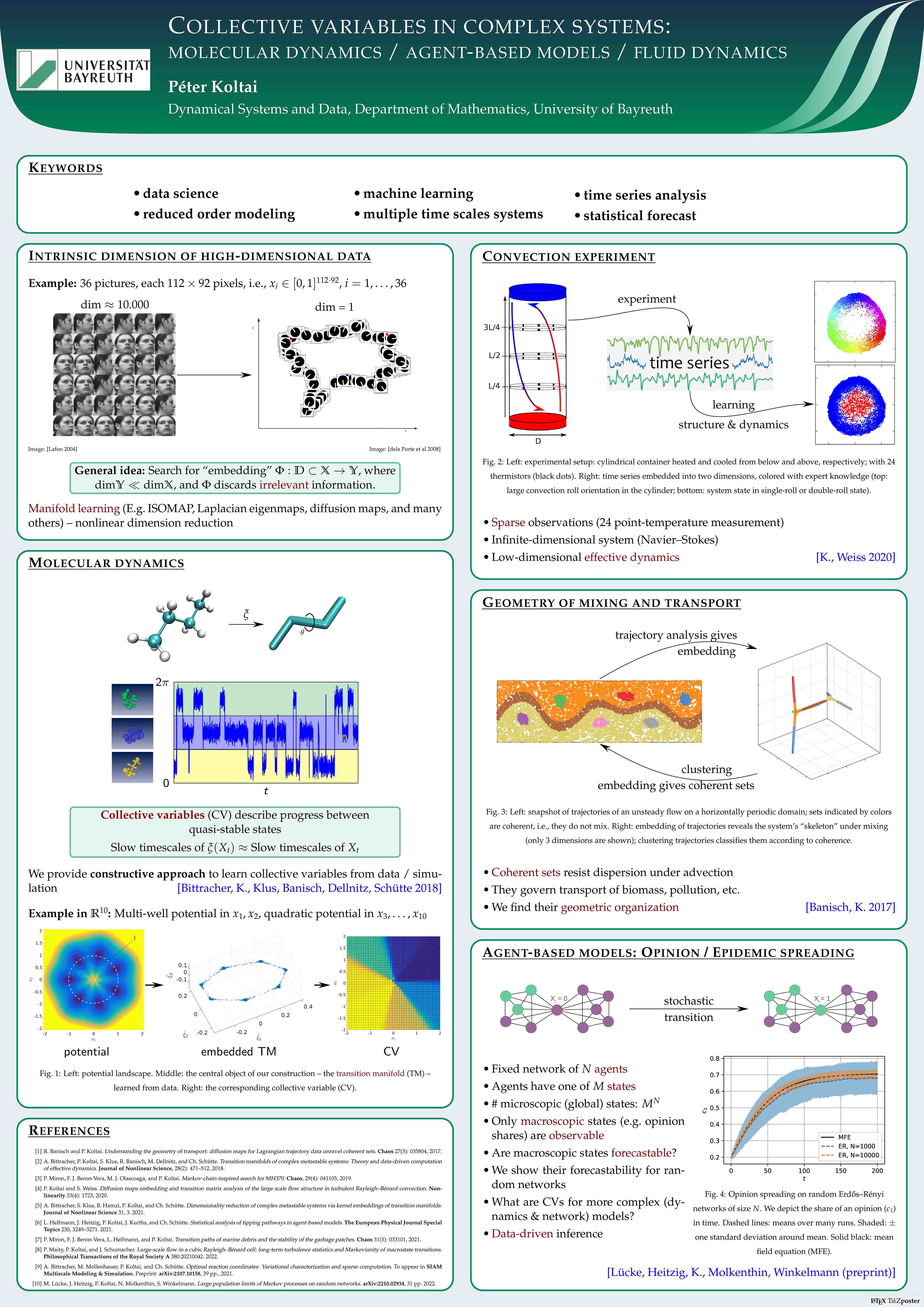

Collective variables in complex systems: from molecular dynamics to agent-based models and fluid dynamics

Péter Koltai

The research of the Chair for Dynamical Systems and Data focuses on the data-driven analysis and forecast of complex (dynamical) systems. One particular aspect is reduced order modeling of such systems by developing new tools on the interface of dynamical systems, machine learning and data science. This presentation summarizes some recent projects in this direction, with various application across different scientific fields:

The identification of persistent forecastable structures in complicated or high-dimensional dynamics is vital for a robust prediction (or manipulation) of such systems in a potentially sparse-data setting. Such structures can be intimately related to so-called collective variables known for instance from statistical physics. We have recently developed a first data-driven technique to find provably good collective variables in molecular systems. Here we will discuss how the concept generalizes to other applications as well, such as fluid dynamics and social or epidemic dynamics.

- Computer Vision for automated tool wear monitoring and classificationHide

-

Computer Vision for automated tool wear monitoring and classification

Markus Friedrich, Theresa Gerber, Jonas Dumler, Frank Döpper

In the manufacturing industry, one of the biggest tasks is to act in the sense of sustainability and to avoid the waste of resources. In this sense, there is great potential in reducing the renewal of production tools by optimizing replacement intervals. Especially in the field of machining, tools are often replaced according to fixed intervals or empirically determined intervals, regardless of actual wear. Thus, the need arises for a way to efficiently monitor the wear condition and predict the remaining life of tools.

A system for automatic monitoring and classification of the wear of face milling tools was developed at the chair. A direct method in the form of optical monitoring was used. Due to advances in camera technology and artificial intelligence, excellent results could be achieved by using a computer vision approach without resorting to expensive camera technology. Due to the compact design, both online and offline operation are possible. The use of low-cost camera technology and an efficient algorithm allow the retrofitting into existing machines and is therefore also attractive for small and medium-sized enterprises.

- Constraint-based construction of geometric representationsHide

-

Constraint-based construction of geometric representations

Andreas Walter

My research related to my PhD thesis in the field of mathematics and computer science relates to the optimization of equations representing geometric systems. The software sketchometry (www.sketchometry.org) offers a simple tool for creating geometric constructions with your finger or mouse. These constructions are built up hierarchically, as with analog constructions. This means that certain construction steps have to happen before others in order to create the finished construction. The constraint-based geometry approach follows a different procedure. Here the user has a given set of generic objects that he can restrict with constraints. These constraints can be understood as equations whose system has to be optimized in an efficient way within a certain framework. The runtime of the algorithm in particular plays a central role here.

The goal of my project is a software that is constraint-based in design, but is as easy to use as sketchometry. If the user changes his construction after defining the constraints, for example by dragging a point, the constraints should continue to be fulfilled. The optimization required for this does not yet have the desired performance. - Context-Aware Social Network AnalysisHide

-

Context-Aware Social Network Analysis

Mirco Schönfeld, Jürgen Pfeffer

We are surrounded by networks. Often we are part of these networks ourselves, for example with our personal relationships. Often the networks are not visible per se, for example because the structures first have to be extracted from other data to be accessible.

Regardless of the domains from which the networks originate, structural information is only one part of the model of reality: each network, each node, and each connection within are subject to highly individual contextual circumstances. Such contextual information contains rich knowledge. They can enrich the structural data with explanations and provide access to semantics of structural connections.

This contrasts with the classical methods of network analysis, which indeed produce easily accessible and easily understandable results. But, so far, little attention can be payed to contextual information. This contextual information has yet to be made accessible to algorithms of network analysis.

This talk therefore presents methods for processing contextual information in network analysis using small and big data as examples. On the level of manageable data sets, for example, new types of centrality rankings can be derived. Here, context introduces possible constraints and acts as an inhibitor for the detection of paths. Consequently, individual views of single nodes on the global network emerge.

On the level of very large data sets, the analysis of contextually attributed networks offers new approaches in the field of network embedding. The resulting embeddings contain valuable information about the semantics of relationships.

The goal of context-aware social network analysis is, on the one hand, to benefit from the diverse knowledge that lies dormant in contextual information. On the other hand, novel explanations for analysis results are to be opened up and made accessible.

- Data-derived digital twins for the discovery of the link between shopping behaviour and cancer riskHide

-

Data-derived digital twins for the discovery of the link between shopping behaviour and cancer risk

Fabio S Ferreira, Suniyah Minhas, James M Flanagan, Aldo Faisal

Early cancer prevention and diagnostics are typically based on clinical data, however behavioural data, especially consumer choices, may be informative in many clinical settings. For instance, changes in purchasing behaviour, such as an increase in self-medication to treat symptoms without understanding the root cause, may provide an opportunity to assess the person’s risk of developing cancer. In this study, we used probabilistic machine learning methods to identify data-derived Digital Twins of patients' behaviour (i.e., latent variable models) to investigate novel behavioural indicators of risk of developing cancer, discoverable in mass transactional data for ovarian cancer, for which it is known that non-specific or non-alarming symptoms are present, and are thus likely to affect purchasing behaviour. We applied Latent Dirichlet Allocation (LDA) to the shopping baskets of loyalty shopping card data of ovarian cancer patients with over two billion anonymised transactional logs to infer topics (i.e., topics) that may describe distinct consumers’ shopping behaviours. This latent continuous representation (the Digital Twin) of the patient can then be used to detect changepoints that may indicate early diagnosis, and therefore increase a patient’s chances of survival. In this study, we compared two machine learning models to identify changepoints on synthetic data and ovarian cancer data: the Online Bayesian Changepoint detector (unsupervised method) and a Long Short-Term Memory (LSTM) network (supervised method). Our approaches showed good performance in identifying changepoints in artificial shopping baskets, and promising results in the ovarian cancer dataset, where future work is needed to account for potential external confounders and the limited sample size.

- Deep Learning in BCI DecodingHide

-

Deep Learning in BCI Decoding

Xiaoxi Wei

Deep learning has been successful in BCI decoding. However, it is very data-hungry and requires pooling data from multiple sources. EEG data from various sources decrease the decoding performance due to negative transfer. Recently, transfer learning for EEG decoding has been suggested as a remedy and become subject to recent BCI competitions (e.g. BEETL), but there are two complications in combining data from many subjects. First, privacy is not protected as highly personal brain data needs to be shared (and copied across increasingly tight information governance boundaries). Moreover, BCI data are collected from different sources and are often based on different BCI tasks, which has been thought to limit their reusability. Here, we demonstrate a federated deep transfer learn- ing technique, the Multi-dataset Federated Separate-Common- Separate Network (MF-SCSN) based on our previous work of SCSN, which integrates privacy-preserving properties into deep transfer learning to utilise data sets with different tasks. This framework trains a BCI decoder using different source data sets obtained from different imagery tasks (e.g. some data sets with hands and feet, vs others with single hands and tongue, etc). Therefore, by introducing privacy-preserving transfer learning techniques, we unlock the reusability and scalability of existing BCI data sets. We evaluated our federated transfer learning method on the NeurIPS 2021 BEETL competition BCI task. The proposed architecture outperformed the baseline decoder by 3%. Moreover, compared with the baseline and other transfer learning algorithms, our method protects the privacy of the brain data from different data centres.

- Digital data ecosystems for the verification of corporate carbon emission reportingHide

-

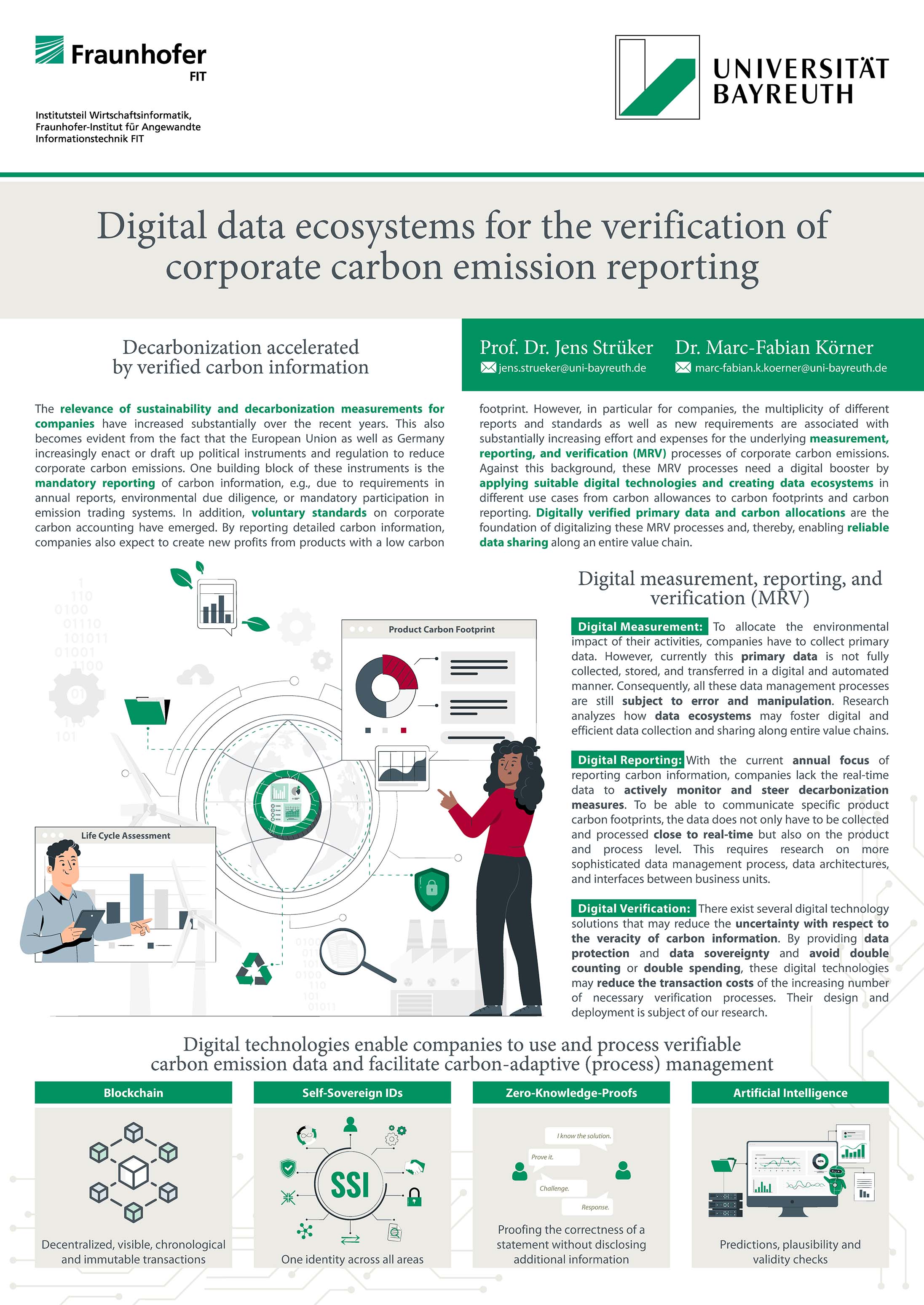

Digital data ecosystems for the verification of corporate carbon emission reporting

Marc-Fabian Körner, Jens Strüker

Carbon taxes and emission trading systems have proven to be powerful decarbonization instruments; however, achieving our climate goals requires to reduce emissions drastically. Consequently, the EU has adopted regulatory measures, e.g., the expansion of the ETS or the carbon border adjustment mechanism (CBAM), which will require carbon emissions to be managed and priced more precisely. However, this capability is accompanied by unprecedented challenges for companies to report their carbon emissions in a more fine-granular and verifiable manner. These requirements call for an integrated and interoperable systems that can track carbon emissions from cradle to grave by enabling a digital end-to-end monitoring, reporting, and verification tooling (d-MRV) of carbon emissions across value chains to different emitters. To establish such systems, our research proposes recent advances in digital science as central key-enablers. In detail, our research aims to design digital data ecosystems for the verification of corporate carbon emission reporting that accounts for regulatory requirements (cf. CBAM and EU-ETS) and that prevents greenwashing and the misuse of carbon labels that are currently highly based on estimations. Accordingly, it will be possible to digitally verify and report a company’s – and even a specific product’s – carbon footprint. Therefore, we apply several digital technologies that fulfill different functions to enable digital verification: While distributed ledger technologies, like Blockchains, may be used as transparent registers – not for the initial data but for the proof of correctness of inserted data – zero-knowledge proofs and federated learning may be applied for the privacy-preserving processing of competition-relevant data. Moreover, a digital identity management may be used for the identification of machines or companies and for governance and access management. Against this background, we also refer to data spaces as corresponding reference architectures. Overall, we aim to develop end-to-end d-MRV solutions that are directly connected to registries and the underlying infrastructure.

- Digital Science in Business Process ManagementHide

-

Digital Science in Business Process Management

Maximilian Röglinger, Tobias Fehrer, Dominik Fischer, Fabian König, Linda Moder, Sebastian Schmid

Business Process Management (BPM) is concerned with the organization of work in business processes. To this end, processes are defined, modelled, and continuously analysed and improved. With the increasing digitization and system support of process execution in recent years, new opportunities for applying novel data-driven BPM methods for process analysis and improvement have emerged. Research in this field aims to gain insight from process execution data and translate it into actionable knowledge. On the insight perspective, our research focuses on how to extract process behaviours from semi-structured and unstructured data such as videos or raw sensor data, and applies machine learning to detect patterns in workflows. In addition, we apply generative machine learning techniques to improve the data quality of raw data from process execution for subsequent analysis. Another example is the application of natural language processing techniques for this purpose. Focusing on the conversion from insight into action, experts are increasingly using this data for analyses with the help of process mining. In knowledge- and creativity-intensive projects, process improvement options are then derived and evaluated for their value. In our research, we have explored approaches to support the search for better process models through technology: In an assisted approach, we automatically search for process improvement ideas and compare different redesign options via simulation experiments, so that users are supported in their decision making. In another approach, we use Generative Adversarial Networks to propose entirely new process models and stimulate human creativity. Through this selection of work, it becomes apparent that BPM can benefit in many ways from digital technologies and, therefore, there are still numerous opportunities to explore.

- Digital Science in histological analysisHide

-

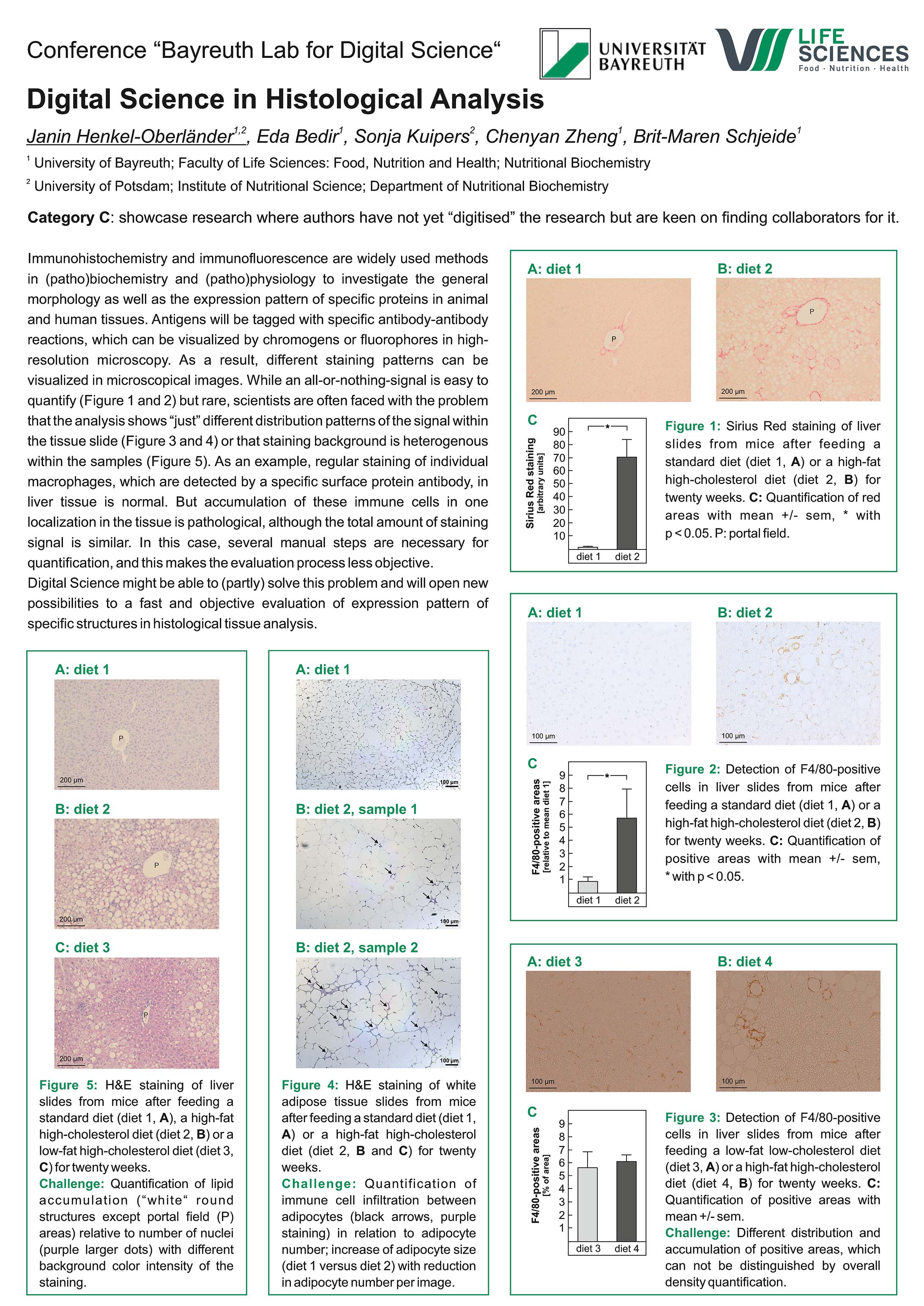

Digital Science in histological analysis

Janin Henkel-Oberlaender, Eda Bedir, Chenyan Zheng, Brit-Maren Schjeide

Category c: showcase research where authors have not yet “digitised” the research but are keen on finding collaborators for it.

Immunohistochemistry and Immunofluorescence are widely used methods in (patho)biochemistry and (patho)physiology to investigate the general morphology as well as the expression pattern of specific proteins in animal and human tissues. Antigens will be tagged with specific antibody-antibody reactions, which can be visualized by chromogens or fluorophores in high-resolution microscopy. As a result, different staining pattern can be visualized in microscopical images. While an all-or-nothing-signal is easy to quantify but rare, scientists are often faced with the problem that the analysis shows “just” different distribution patterns of the signal within the tissue slide. As an example, regular staining of individual macrophages, which are detected by a specific surface protein antibody, in liver tissue is normal. But accumulation of these immune cells in one localization in the tissue is pathological, although the total amount of staining signal is similar. In this case, several manual steps are necessary for quantification, and this makes the evaluation process less objective.

Digital Science might be able to (partly) solve this problem and will open new possibilities to a fast and objective evaluation of expression pattern of specific structures in histological tissue analysis. - Digitization of Product Development and RecyclingHide

-

Digitization of Product Development and Recycling

Niko Nagengast, Franz Konstantin Fuss

Digitization and automation can accelerate the value creation of performance, cost, and sustainability. During all phases of a product’s Life Cycle, algorithmic techniques can be applied to create, optimize and evaluate products or processes. With the technological and commercial maturity of Additive Manufacturing, an extension of design and material opportunities can be explored and lead towards potentials of innovation in all sectors of the industry. A fusion of both generates a rethinking of material search and substitution, a different geometrical approach of design, and various possibilities in manufacturing.

In considerations of the magnitude of freeform geometries, a time-wise short-term implementation of new materials (relative to other fields), a strive towards lightweight products, and a flexible prototype-like character, the sport and sport-medical industry seems quite promising to set benchmarks or proof-of-concepts regarding innovative workflows: digital concept and detail design, fabrication, reengineering, evaluation, and decision making.

The proposed work contains a cross-section of case studies and concepts to combine digital approaches reaching from parametric form-finding opportunities of surfboard fins, a material modelling approach towards sustainable and anisotropic product design using Fused Filament Fabrication (FFF), a guideline approach for the substitution of conventional parts through AM using multi-criteria-decision making, and a KI-driven, databased search flow for a sustainable material implementation.The outcome clearly shows the potential to entwine various algorithmic designs with AM. Furthermore, it shall inspire others to implement and scale these or more profound strategies in their academical or industrial field of expertise.

- Discovery Space: An AI-Enhanced Classroom for Deeper Learning in STEMHide

-

Discovery Space: An AI-Enhanced Classroom for Deeper Learning in STEM

Franz X. Bogner, Catherine Conradty

Traditional assessments of cognitive skills and knowledge acquisition are in place in most educational systems. These approaches though are not harmonized with the innovative and multidisciplinary curricula proposed by current reforms focusing on the development of 21st century skills that require in-depth understanding and authentic application. This divergence must be addressed if STEM education is to become a fulfilling learning experience and an essential part of the core education paradigm everywhere. Discovery Space based on rooted research in the field and long-lasting experience employing ICT-based innovations in education is proposing the development of a roadmap for the AI-Enhanced Classroom for Deeper Learning in STEM that facilitates the transformation of the traditional classroom to an environment that promotes the scientific exploration and monitors and supports the development of key skills for all students. The project starts with a foresight research exercise to increase the understanding of the potential, opportunities, barriers, accessibility issues and risks of using emerging technologies (AI-enabled assessment systems combined with AR/VR interfaces) for STEM teaching, considering at the same time a framework for the sustainable digitization of education. The project designs an Exploratory Learning Environment (Discovery Space) to facilitate students’ inquiry and problem-solving while they are working with virtual and remote labs. This enables AI-driven lifelong learning companions to provide support and guidance and with VR/AR interfaces to enhance the learning experience, to facilitate collaboration and problem solving. It also provides Good Practices of Scenarios and pilots to equip teachers and learners with the skills necessary for the use of technology appropriately (the Erasmus+ project’s lifetime: 2023-2015).

- Discrete OptimizationHide

-

Discrete Optimization

Dominik Kamp, Sascha Kurz, Jörg Rambau, Ronan Richter

Optimization is one of most influential digital methods of the past 70 years. In the most general setting, optimization seeks to find a best option out of a set of feasible options. Very often, options consist of a complex combination of atomic decisions. If some of these decisions are, e.g., yes/no-decisions or decisions on integral quantities like cardinalities, then the optimization problem is discrete (as opposed to continuous). For example, your favorite navigation system solves discrete optimization problems whenever you ask for a route, since you can only go this way or that way and not half this way and half that way at the same time. This poster presents, among a brief introduction into the mathematical field, some acadamic and real-world examples for discrete optimization. The main goal is to show how far one can get with this tool today and what has been done by members of the Chair of Economathematics (Wirtschaftsmathematik) at the University of Bayreuth.

- Discrete structures, algorithms, and applicationsHide

-

Discrete structures, algorithms, and applications

Sascha Kurz

The development of digital computers, operating in "discrete" steps and storing data in "discrete" bits, has accelerated research in Discrete Mathematics. Here mathematical structures that can be considered to be "discrete", like the usual integers, rather than "continuous", like the real numbers, are studied. There exists a broad variety of discrete structures like e.g. linear codes, graphs, polyominoes, integral point sets, or voting systems.

Optimizing over discrete structures can have several characteristics. In some cases an optimum can be determined analytically or we can derive some properties of the optimal discrete structures. In other cases we can design algorithms that determine optimal solutions in reasonable time or we can design algorithms that locate good solutions including a worst case guarantee.

The corresponding poster exemplarily highlights results for a variety of different discrete structures and invitesm researchers for discussions about discrete structures and related optimization problems occuring in their projects.

- DiSTARS: Students as Digital Storytellers: STEAM approach to Space ExplorationHide

-

DiSTARS: Students as Digital Storytellers: STEAM approach to Space Exploration

Franz X. Bogner, Catherine Conradty

STEAM education is about engaging students in a multi- and interdisciplinary learning context that values the artistic activities, while breaking down barriers that have traditionally existed between different classes and subjects. This trend reflects a shift in how school disciplines are being viewed. It is driven by a commitment to fostering everyday creativity in students, such that they engage in purposive, imaginative activity generating outcomes that are original and valuable for them. While this movement is being discussed almost explicitly in an education context, its roots are embedded across nearly every industry. A renewed interplay between art, science and technology exists. In many ways, technology is the connective tissue.

DiSTARS tests a synchronized integration of simulating ways in which subjects naturally connect in the real world. Combining scientific inquiry with artistic expression (e.g. visual and performing arts), storytelling, and by using existing digital tools (such as the STORIES storytelling platform) along with AR and VR technology, the project aims to capture the imagination of young students and provide them with one of their early opportunities for digital creative expression. The selected thematic area of study is Space Exploration which despite of the strong gendering of interest in science as the issue of a potential life outside of Earth is interesting for both girls and boys. Students involved in the project work collaboratively in groups to express their visions for the future of space exploration. Each group of students creates a digital story in the form of an e-book (in a 2D or 3D environment) showing how they imagine Mars (or the Moon), the trip, the arrival, the buildings on the planet, the life of humans there. The project focuses at the age range of 10 to 12 years old students (The Erasmus+ project’s lifetime: 2020-2023).

- Distributional Reinforcement LearningHide

-

Distributional Reinforcement Learning

Luchen Li, Aldo Faisal

Distributional Reinforcement Learning maintains the entire probability distribution of the reward-to-go, i.e. the return, providing more learning signals that account for the intrinsic uncertainty associated with policy performance, which may be beneficial for trading off exploration and exploitation and policy learning in general. We first prove that the distributional Bellman operator for state-return distributions is also a contraction in Wasserstein metrics, extending previous work on state-action returns. This enables us to translate successful conventional RL algorithms that are based on state values into distributional RL. We formulate the distributional Bellman operation as an inference-based auto-encoding process that minimises Wasserstein metrics between target/model return distributions. The proposed algorithm, Bayesian Distributional Policy Gradients (BDPG), uses adversarial training in joint-contrastive learning to estimate a variational posterior over a latent variable. Moreover, we can now interpret the return prediction uncertainty as an information gain, from which a new curiosity measure can be obtained to boost exploration efficiency. We demonstrate in a suite of Atari 2600 games and MuJoCo tasks, including well recognised hard-exploration challenges, how BDPG learns generally faster and with higher asymptotic performance than reference distributional RL algorithms.

- ECO2 -SCHOOLS as New European Bauhaus (NEB) LabsHide

-

ECO2 -SCHOOLS as New European Bauhaus (NEB) Labs

Franz X. Bogner, Tessa-Marie Baierl

The Erasmus+ project acronymed NEB-LAB combines the expertise of architects, school principal, community leaders, educators and psychometric experts. It develops within the project’s lifetime (2023-2025) physical pilot sites of schools, universities, and science centers in five European countries (Sweden, France, Greece, Portugal, Ireland) to eco-renovate educational buildings that serve as living labs to promote sustainable citizenship. Within the project frame, the University of Bayreuth assures the assessment work package by measuring the effects on school environments and framing students’ sustainable citizenship. Building on the concept of Open Schooling, the selected pilot sites will develop concrete and replicable climate action plans to be transformed to innovation hubs in their communities, raising citizen awareness activities to facilitate social innovation, promote education and training for sustainability, conducive to competences and positive behaviour for a resource efficient and environmentally respectful energy use. The process is guided and supported by an innovative partnership scheme that brings together unique expertise (including architects and landscape designers, technology and solutions providers, financial bodies and local stakeholders). The project demonstrates that such educational buildings can act as drivers for the development of green neighbourhood “living labs” and guide toward sustainable citizenship. The common feature in all pilot sites will be a by-product of the desire to create a building that inspires forward-looking, inquiry-based learning, and a sense of ownership among students and building stakeholders alike.

- Engineering specific binding pockets for modular peptide binders to generate an alternative for reagent antibodiesHide

-

Engineering specific binding pockets for modular peptide binders to generate an alternative for reagent antibodies

Josef Kynast, Merve Ayyildiz, Jakob Noske, Birte Höcker

Current biomedical research and diagnostics critically depend on detection agents for specific recognition and quantification of protein molecules. Due to shortcomings of state-of-the-art commercial reagent antibodies such as low specificity or cost-efficiency, we work on an alternative recognition system based on a regularized armadillo repeat protein. The project is a collaboration with the groups of Anna Hine (Aston, UK) and Andreas Plückthun (Zürich, CH). We focus on the detection and analysis of interaction patterns to design new binder proteins. Additionally, we try to predict the binding specificity or simulate binding dynamics of those designed protein binders. Here we present our computational approach.

- Explainable Intelligent Systems (EIS)Hide

-

Explainable Intelligent Systems (EIS)

Lena Kästner, Timo Speith, Kevin Baum, Georg Borges, Holger Hermanns, Markus Langer, Eva Schmidt, Ulla Wessels

Artificially intelligent systems are increasingly used to augment or take over tasks previously performed by humans. These include high-stakes tasks such as suggesting which patient to grant a life-saving medical treatment or navigating autonomous cars through dense traffic---a trend raising profound technical, moral, and legal challenges for society.

Against this background, it is imperative that intelligent systems meet central desiderata placed on them by society. They need to be perceivably trustworthy, support competent and responsible decisions, allow for autonomous human agency and for adequate accountability attribution, conform with legal rights, and preserve fundamental moral rights. Our project substantiates the widely accepted claim that meeting these desiderata presupposes human stakeholders to be able to understand intelligent systems’ behavior and that to achieve this, explainability is crucial.

We combine expertise from informatics, law, philosophy, and psychology in a highly interdisciplinary and innovative research agenda to develop a novel ‘explainability-in-context’ (XiC) framework for intelligent systems. The XiC framework provides concrete suggestions regarding the kinds of explanations needed in different contexts and for different stakeholders to meet specific societal desiderata. It will be geared towards guiding future research and policy-making with respect to intelligent systems as well as their embedding in our society. - Fair player pairings at chess tournamentsHide

-

Fair player pairings at chess tournaments

Agnes Cseh, Pascal Führlich, Pascal Lenzner

The Swiss-system tournament format is widely used in competitive games like most e-sports, badminton, and chess. In each round of a Swiss-system tournament, players of similar score are paired against each other. We investigate fairness in this pairing system from two viewpoints.

1) The International Chess Federation (FIDE) imposes a voluminous and complex set of player pairing criteria in Swiss-system chess tournaments and endorses computer programs that are able to calculate the prescribed pairings. The purpose of these formalities is to ensure that players are paired fairly during the tournament and that the final ranking corresponds to the players' true strength order. We contest the official FIDE player pairing routine by presenting alternative pairing rules. These can be enforced by computing maximum weight matchings in a carefully designed graph. We demonstrate by extensive experiments that a tournament format using our mechanism (1) yields fairer pairings in the rounds of the tournament and (2) produces a final ranking that reflects the players' true strengths better than the state-of-the-art FIDE pairing system.

2) An intentional early loss at a Swiss-system tournament might lead to weaker opponents in later rounds and thus to a better final tournament result---a phenomenon known as the Swiss Gambit. We simulate realistic tournaments by employing the official FIDE pairing system for computing the player pairings in each round. We show that even though gambits are widely possible in Swiss-system chess tournaments, profiting from them requires a high degree of predictability of match results. Moreover, even if a Swiss Gambit succeeds, the obtained improvement in the final ranking is limited. Our experiments prove that counting on a Swiss Gambit is indeed a lot more of a risky gambit than a reliable strategy to improve the final rank. - Flexible automated production of oxide short fiber compositesHide

-

Flexible automated production of oxide short fiber composites

Lukas Wagner, Georg Puchas, Dominik Henrich, Stefan Schaffner

In order to enable intuitive on-line robot programming for fiber spray processes, a robot programming system is required which is characterized by its flexibility in programming for the use in small and medium-sized companies. This can be achieved by using editable playback robot programming. In this research project, the editable playback robot programming is applied to a continuous process for the first time in order to enable the robot-supported production of small batches. Thus, a domain expert should be able to operate the robot system without prior programming knowledge, so that he can quickly program a robot path and the associated control of peripheral devices required for a given task. Fundamental extensions are also to be used to investigate possibilities for optimizing robot programs and their applicability within the editable playback robot programming. The fiber spraying process of oxide fiber composites serves as a practical application example, as this material places particularly high demands on the impregnation of fiber bundles and their preservation, for which the fiber spraying process and its automation are particularly suitable. The planned intuitive programming is to be tested as an example on this little-researched composite material, which has great potential for the high temperature application (combustion technology, metallurgy, heat treatment). If successful, the interdisciplinary cooperation between applied computer science and materials engineering provides the framework for the process engineering and materials research of a new class of materials for high-temperature lightweight construction.

- Flow-Design Models for Supply ChainsHide

-

Flow-Design Models for Supply Chains

Dominik Kamp, Jörg Rambau

A main task in supply chain management is to find an efficient placement of safety stocks between the production stages so that end customer demands can be satisfied in time. This becomes particularly complicated in the presence of multiple supply options, since the selection of suppliers influences the internal demands and therefore the stock level requirements to enable a smooth supply process. The so-called Stochastic Guaranteed Service Model with Demand Propagation determines suitable service times and base stocks along a divergent supply chain with additional nearby suppliers, which are able to serve demanded products in stock-out situations. This model is non-linear by design but can be stated as a big-M-linearized mixed integer program. However, the application of established solvers to the original formulation is intractable already for small supply chains. With a novel equivalent formulation based on time-expanded network flows, a significantly better performance can be achieved. Using this approach, even large supply chains are solved to optimality in less time although much more variables and constraints are involved. This makes it possible for the first time to thoroughly investigate optimal configurations of real-world supply chains within a dynamic simulation environment.

- Fluent human-robot teaming for assembly tasksHide

-

Fluent human-robot teaming for assembly tasks

Nico Höllerich

The utilisation of robots has the potential to lower costs or increase production capacities for assembly tasks. However, full automatisation is often not feasible as the robot lacks dexterity. In contrast, purely manual assembly is expensive and tedious. Human-robot teaming promises to combine the advantages of both. Since humans often attribute intelligence to robots, they expect that a robot teaming partner adapts to their preferences. Existing human-robot workspaces, however, do not offer adaption but follow a fixed schedule. Future solutions should overcome this shortcoming. Human and robot should fluently work together and adapt to the preferences of each other. This is possible as humans follow specific patterns (e.g. work from left-to-right, or compose layer-by-layer). Traditional machine learning approaches, such as neural networks, have the required expressiveness to capture and learn those patterns. But they often require large amounts of training data. Our approach avoids this problem by transforming sequences of task steps into a carefully designed feature space. We designed a small neural network specifically for this feature space. Input is a sequence of previous steps and candidates for the next step. Output is a probability distribution over the candidates. That way, the neural network can predict the human’s next step. The result guides the robot decision-making king. The advantage compared to deep learning frameworks is that the network can be trained on the fly while the human is performing the task with the robot. The sequence of previous steps serves as training data for the network. We conducted a user study where human and robot repeatedly compose and decompose a small structure of building blocks. The study shows that our approach leads to overall fluent cooperation. If the robot picks the same step as predicted for the human, fluency drastically decreases – indicating the quality of the prediction.

- Graph Pattern Matching in GQL and SQL/PGQHide

-

Graph Pattern Matching in GQL and SQL/PGQ

Alin Deutsch, Nadime Francis, Alastair Green, Keith Hare, Bei Li, Leonid Libkin, Tobias Lindaaker, Victor Marsault, Wim Martens, Jan Michels, Filip Murlak, Stefan Plantikow, Petra Selmer, Oskar van Rest, Hannes Voigt, Domagoj Vrgoc, Mingxi Wu, Fred Zemke

As graph databases become widespread, JTC1 -- the committee in joint charge of information technology standards for the International Organization for Standardization (ISO), and International Electrotechnical Commission (IEC) -- has approved a project to create GQL, a standard property graph query language. This complements a project to extend SQL with a new part, SQL/PGQ, which specifies how to define graph views over an SQL tabular schema, and to run read-only queries against them.

Both projects have been assigned to the ISO/IEC JTC1 SC32 working group for Database Languages, WG3, which continues to maintain and enhance SQL as a whole. This common responsibility helps enforce a policy that the identical core of both PGQ and GQL is a graph pattern matching sub-language, here termed GPML.

The WG3 design process is also analyzed by an academic working group, part of the Linked Data Benchmark Council (LDBC), whose task is to produce a formal semantics of these graph data languages, which complements their standard specifications.

This paper, written by members of WG3 and LDBC, presents the key elements of the GPML of SQL/PGQ and GQL in advance of the publication of these new standards. - Heterogeneous cell structures in AFM and shear flow simulationsHide

-

Heterogeneous cell structures in AFM and shear flow simulations

Sebastian Wohlrab, Sebastian Müller, Stephan Gekle

In biophysical cell mechanics simulations, the complex inner structure of cells is often simplified as homogeneous material. However, this approach neglects individual properties of the cell’s components, e.g., the significantly stiffer nucleus.

By introducing a stiff inhomogeneity inside our hyperelastic cell, we investigate it during AFM compression and inside shear flow in finite- element and Lattice Boltzmann calculations.

We show that a heterogenous cell exhibits almost identical deformation behavior under load and in flow as compared to a homogeneous cell with equal averaged stiffness, supporting the validity of the homogeneity assumed in both mechanical characterization as well as numerical computations. - High-performance computing for climate applicationsHide

-

High-performance computing for climate applications

Vadym Aizinger, Sara Faghih-Naini

Numerical simulation of climate and its compartments is a key tool for predicting the climate change and quantifying the global and regional impact of various aspects of this change on ecological, economical and social conditions. Dramatically improving the predictive skill of modern climate models requires more sophisticated representations of physical, chemical and biological processes, better numerical methods and substantial breakthroughs in computational efficiency of model codes. Our work covers some of the most promising numerical and computational techniques that hold promise of significantly improving the computational performance of future ocean and climate models.

- How does ChatGPT change our way ouf teaching, learning and examining?Hide

-

How does ChatGPT change our way ouf teaching, learning and examining?

Paul Dölle

ChatGPT can become a tool for our daily work. The tool does not think for us, we can think with the tool. In order for us to be able to do that, we have to understand it, get to know the possibilities and risks and adjust ourselves and the students didactically to it.

The project at ZHL (Center for Teaching and Learning) deals with the effects of automated writing aids on teaching and offers further training opportunities for teachers. - Human-AI Interaction in Writing ToolsHide

-

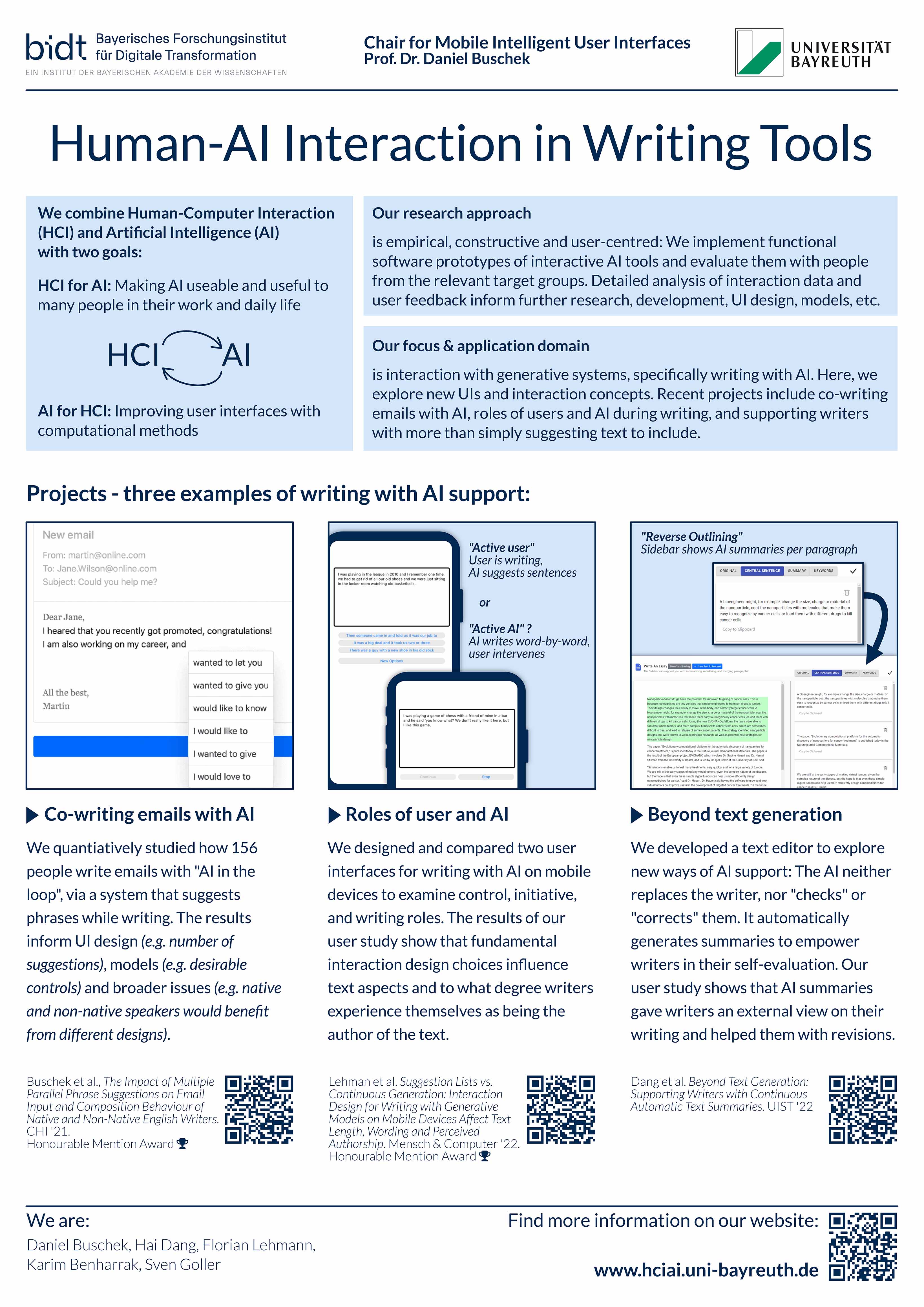

Human-AI Interaction in Writing Tools

Daniel Buschek, Hai Dang, Florian Lehmann

We explore new user interfaces and interaction concepts for writing with AI support, with recent projects such as: 1) The impact of phrase suggestions from a neural language model on user behaviour in email writing for native and non-native English speakers. 2) A comparison of suggestions lists and continuous text generation and its impact on text length, wording, and perceived authorship. 3) A new text editor that provides continuously updated paragraph-wise summaries as margin annotations, using automatic text summarization, to empower users in their self-reflection. More broadly, our work examines opportunities and challenges of designing interactive AI tools with Natural Language Processing capabilities.

- Impact of XAI dose suggestions on the prescriptions of ICU doctorsHide

-

Impact of XAI dose suggestions on the prescriptions of ICU doctors

Myura Nagendran, Anthony Gordon, Aldo Faisal

Background.

Our AI Clinician reinforcement learning agent has entered prospective evaluation. Here we try to understand (i) how much an AI can influence an ICU doctor’s prescribing decision, (ii) how much knowing the distribution of peer actions influences the doctor and (iii) whether or how much an AI explanation influences the doctor’s decision.Methods.

We conducted an experimental human-AI interaction study with 86 ICU doctors using a modified between-subjects design. Doctors were presented for each of 16 trials with a patient case, potential additional information depending on the experiment arm, and then prompted to prescribe continuous values for IV fluid and vasopressor. We used a multi-factorial experimental design with 4 arms. The 4 arms were baseline with no AI or peer human information; peer human clinician scenario showing the probability density function of IV fluid and vasopressor doses prescribed by other doctors; AI scenario; explainable AI (XAI) scenario (feature importance).Results.

Our primary measure was the difference in prescribed dose to the same patient across the 4 different arms. For the same patients, providing clinicians with peer information (B2) did not lead to an overall significant prescription difference compared to baseline (B1). In contrast, providing AI/XAI (B3-4) information led to significant prescription differences compared to baseline for IV fluid. Importantly, the XAI condition (B4) did not lead to a larger shift towards the AI’s recommendation than the AI condition (B3).Discussion.

This study suggests that ICU clinicians are influenceable by dose recommendations. Knowing what peers had done had no significant overall impact on clinical decisions while knowing that the recommendation came from AI did make a measurable impact. However, whether the recommendation came in a “naked” form or garnished with an explanation (here simple feature importance) did not make a substantial difference. - Invasive Mechanical VentilationHide

-

Invasive Mechanical Ventilation

Yuxuan Liu, Aldo Faisal, Padmanabhan Ramnarayan

Invasive mechanical ventilation, a vital supportive therapy for critically ill patients with respiratory failure, is one of the most widely used interventions in admissions to the PICU: more than 60% of the 20,000 children admitted to UK PICUs each year receive breathing support through an invasive ventilator, spanning for 45.3% of the total bed days. Weaning refers to the gradual process of transition from full ventilatory support to spontaneous breathing, including, in most patients, the removal of the endotracheal tube. The dynamic nature of respiratory status means that what might be 'right' at one time point may not be 'right' at another time point. Acknowledging that this dynamic nature results in wide clinical variation in practice, and that it may be possible to augment clinicians' decision-making with computerised decision support, there has been a growing interest in the use of artificial intelligence (AI) in intensive care settings, both for adults and children. However, the current AI landscape is increasingly acknowledged as incomplete, with frequent concerns regarding AI reproducibility and generalisability. More importantly, there are currently no specific AI systems taking special consideration of features that are distinctive to the paediatric population and could effectively optimizing ventilator management in PICUs.

This work aims to develop an AI-based decision support tool that uses available historical

data for patients admitted to three UK paediatric intensive care units (PICUs) to suggest

treatment steps aimed at minimising the duration of ventilation support while maximizing

extubation successful rates. - Isolating motor learning mechanisms through a billiard task in Embodied Virtual RealityHide

-

Isolating motor learning mechanisms through a billiard task in Embodied Virtual Reality

Federico Nardi, Shlomi Haar, Aldo Faisal

Motor learning is driven by error and reward feedback which are considered to be processed by two different mechanisms: error-based and reward-based learning. While those are often isolated in lab based tasks, it is not trivial to dissociate between them in the real-world. In previous works we established the game of pool billiards as a real-world paradigm to study motor learning, tracking the balls on the table to measure task performance, using motion tracking to capture full-body movement and electroencephalography (EEG) to capture brain activity. We demonstrated that during real-world learning in the pool task different individuals use varying contributions of error-based and reward-based learning mechanisms. We then incorporated it in an embodied Virtual Reality (eVR) environment to enable perturbations and visual manipulations.

In the eVR setup, the participant is physically playing pool while visual feedback is provided by the VR headset. Here, we used the eVR pool task to introduce visuomotor rotations and selectively provide error or reward feedback, as was previously done in lab tasks, to study the effects of the forced use of a single learning mechanism on the learning and the brain activity. Each of the 40 participants attended the lab for two sessions to learn one rotation (clockwise or counter-clockwise) with error feedback and the other with reward, with a coherent different visual feedback provided.

Our behavioural results showed a difference between the learning curves with the different feedback, but with both the participants learned to correct for the rotation, while using separate and single learning mechanisms. - JSXGraph - Mathematical visualization in the web browserHide

-

JSXGraph - Mathematical visualization in the web browser

Carsten Miller, Volker Ulm, Alfred Wassermann

A selection of JSXGraph features are: plotting of function graphs and curves, Riemann sums, support of various spline types and Bezier curves, the mathematical subroutines comprise differential equation solvers nonlinear optimization, advanced root finding, symbolic simplification of mathematical expressions, interval arithmetics, projective transformations, path clipping as well as some statistical methods. Further, (dynamic) mathematical typesetting via MathJax or KaTeX and video embedding is supported. Up to now, the focus was on 2D graphics, 3D support has been started recently.

A key feature of JSXGraph is its seamless integration into web pages. Therefore it became an integral part of several e-assessment platforms, e.g. the moodle-based system STACK, which is very popular for e-assessment in "Mathematics for engineering" courses worldwide. The JSXGraph filter for the elearning system Moodle is meanwhile available in the huge Moodle installation "mebis" for all Bavarian schools, as well as in elearning platforms of many German universities, e.g. University of Bayreuth, RWTH Aachen. The JSXGraph development team has been / is part of several EU Erasmus+ projects (COMPASS, ITEMS, Expert, IDIAM).

- LBM Simulations of Raindrop Impacts demonstrate Microplastic Transport from the Ocean into the AtmosphereHide

-

LBM Simulations of Raindrop Impacts demonstrate Microplastic Transport from the Ocean into the Atmosphere

Moritz Lehmann, Fabian Häusl, Stephan Gekle